Data cleaning and preprocessing are crucial steps in the data analysis process that involve transforming raw data into a clean and structured format suitable for analysis.

Here are some techniques commonly used for data cleaning and preprocessing:

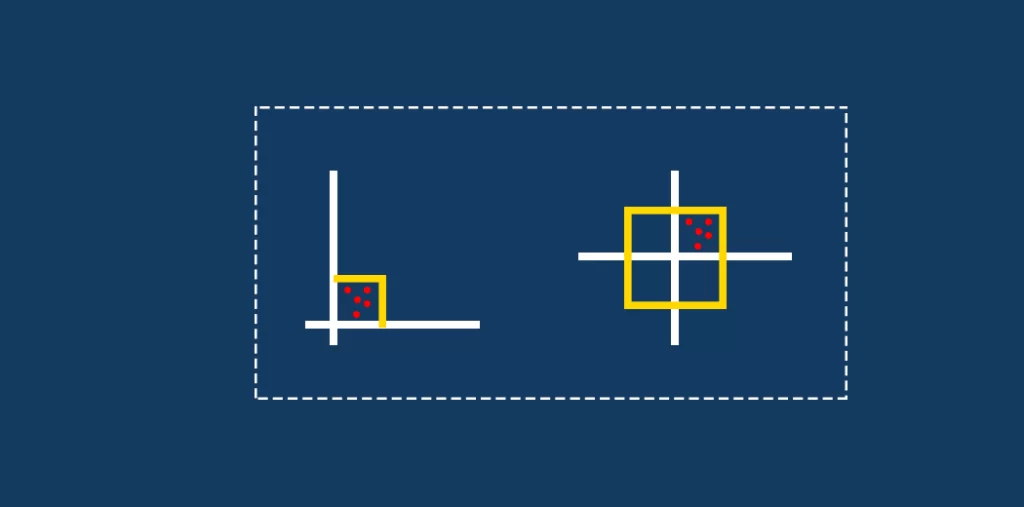

Normalization and Scaling

Normalization and scaling are techniques used to preprocess numerical data so that all features share a common scale. They make features more comparable and prevent features with large numeric ranges from dominating the others in machine learning models.

Here’s an overview of both techniques:

Normalization:

- Aim: Normalization (often called min-max scaling) rescales each feature to the range 0 to 1.

Benefits:

- Suitable for algorithms that require inputs on a similar scale.

- Helps when features have different units or scales.

Example:

Suppose you have a feature like age ranging from 0 to 100. After normalization, the age values would be between 0 and 1.
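A minimal sketch of min-max normalization in plain Python, assuming the usual formula x' = (x - min) / (max - min). The function name `min_max_normalize` and the sample ages are illustrative, not taken from any particular library.

```python
def min_max_normalize(values):
    """Rescale values to [0, 1] using (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature has no spread; map every value to 0.0 to avoid dividing by zero.
        return [0.0 for _ in values]
    return [(x - lo) / (hi - lo) for x in values]


ages = [0, 25, 50, 100]  # hypothetical ages spanning the 0 to 100 range
print(min_max_normalize(ages))  # [0.0, 0.25, 0.5, 1.0]
```

An age of 25 becomes 0.25 because it sits a quarter of the way between the minimum (0) and the maximum (100).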

Standardization:

- Aim: Standardization transforms data to have a mean of 0 and a standard deviation of 1.

Benefits:

- Suitable for algorithms like PCA, k-means clustering, and linear regression (especially when regularization is used).

- Works well when data is approximately normally distributed, although standardization itself does not require normality.

Example:

If you have a feature with a mean of 50 and a standard deviation of 10, after standardization, the new mean will be 0, and the standard deviation will be 1.
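A minimal sketch of standardization (z-scores) using Python's `statistics` module, assuming the formula z = (x - mean) / standard deviation. The function name `standardize` and the sample values are illustrative. With a mean of 50 and a standard deviation of 10, a value of 70 maps to (70 - 50) / 10 = 2.0.

```python
import statistics


def standardize(values):
    """Return z-scores: (x - mean) / standard deviation."""
    mean = statistics.mean(values)
    # pstdev is the population standard deviation (divides by n), so the
    # standardized values end up with a population standard deviation of exactly 1.
    std = statistics.pstdev(values)
    if std == 0:
        # A constant feature has no spread; return zeros instead of dividing by zero.
        return [0.0 for _ in values]
    return [(x - mean) / std for x in values]


values = [40, 60]  # hypothetical feature with mean 50 and standard deviation 10
z = standardize(values)
print(z)  # [-1.0, 1.0]
print(statistics.mean(z), statistics.pstdev(z))  # 0.0 1.0
```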

When to Use Each:

Use Normalization when:

- Features have different ranges.

- The algorithm used requires input values to be within a specific range (e.g., neural networks, image processing).

Use Standardization when:

- Features are normally distributed.

- Linear models, SVMs, or clustering algorithms are applied.

Considerations:

- Always apply normalization or standardization after splitting data into training and test sets. Fit the scaler on the training data only, then use those fitted parameters to transform both the training and test data; fitting on the full dataset leaks test-set information into training (data leakage).

- The choice between normalization and standardization depends on the nature of the data and the requirements of the model being used.
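The fit-on-train, transform-both rule can be sketched with a tiny hand-written scaler. The class `MinMaxScalerSketch` and the sample splits are hypothetical; the point is that the minimum and maximum come from the training split alone, so test values can land outside 0 to 1.

```python
class MinMaxScalerSketch:
    """Hypothetical minimal scaler that learns min/max from training data only."""

    def fit(self, train_values):
        # Statistics are computed from the training split and stored for later use.
        self.lo = min(train_values)
        self.hi = max(train_values)
        return self

    def transform(self, values):
        span = self.hi - self.lo
        if span == 0:
            return [0.0 for _ in values]
        # Any split is scaled with the training min/max, never with its own statistics.
        return [(x - self.lo) / span for x in values]


train = [10, 20, 30, 50]
test = [15, 60]

scaler = MinMaxScalerSketch().fit(train)
print(scaler.transform(train))  # [0.0, 0.25, 0.5, 1.0]
print(scaler.transform(test))   # [0.125, 1.25]
```

In scikit-learn the same pattern uses `MinMaxScaler` or `StandardScaler` from `sklearn.preprocessing`: call `fit` on the training data, then `transform` on both splits.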

Handling Inconsistent Data and Typos

Handling inconsistent data and typos is a critical part of data cleaning to ensure the accuracy and reliability of the dataset.

Here are some techniques and approaches to manage inconsistent data and typos:

Format Standardization:

Convert data into a consistent format. For example:

- Text Data: Convert to lowercase or uppercase to avoid variations due to case sensitivity.

- Dates and Times: Ensure a consistent date format across the dataset (for example, YYYY-MM-DD).

- Addresses or Locations: Standardize abbreviations, spelling, and formatting.
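A minimal sketch of format standardization with the Python standard library. The accepted date formats, the day-first reading of slashed dates, and the abbreviation map are all hypothetical choices for illustration; real data needs its own lists. Collapsing repeated whitespace is an extra step alongside the lowercasing described above.

```python
from datetime import datetime

# Hypothetical formats assumed to appear in the raw data (slashed dates read as day-first).
DATE_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y"]

# Hypothetical abbreviation map for address standardization.
STREET_ABBREVIATIONS = {
    "st": "street", "st.": "street",
    "ave": "avenue", "ave.": "avenue",
    "rd": "road", "rd.": "road",
}


def standardize_text(value):
    """Lowercase the text and collapse repeated whitespace."""
    return " ".join(value.lower().split())


def standardize_date(value):
    """Parse a date in any known format and return it as ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # try the next known format
    return None  # unrecognized format: flag the value for manual review


def standardize_address(value):
    """Lowercase the address and expand known street abbreviations."""
    words = standardize_text(value).split()
    return " ".join(STREET_ABBREVIATIONS.get(w, w) for w in words)


print(standardize_text("  New   YORK "))  # new york
print([standardize_date(d) for d in ["2024-03-14", "14/03/2024", "March 14, 2024"]])
# ['2024-03-14', '2024-03-14', '2024-03-14']
print(standardize_address("12 Main St."))  # 12 main street
```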

Use of Regular Expressions:

- Regular expressions (regex) can help identify and correct patterns or inconsistencies in textual data.

For instance:

- Identify and correct misspelled words by finding patterns or similarities.

- Extract or replace specific patterns in text data.
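A minimal sketch of both ideas using Python's `re` and `difflib` modules. The phone-number format and the list of valid city names are hypothetical. Regular expressions handle the extract and replace steps; the misspelling correction uses `difflib.get_close_matches`, a similarity-based technique rather than a regex, matching the "similarities" approach mentioned above.

```python
import difflib
import re

# Hypothetical phone format: three digits, three digits, four digits, joined by hyphens.
PHONE_PATTERN = re.compile(r"\b\d{3}-\d{3}-\d{4}\b")


def extract_phones(text):
    """Extract every phone number written in the hyphenated format."""
    return PHONE_PATTERN.findall(text)


def normalize_phone_separators(text):
    """Replace dots or spaces between digit groups with hyphens."""
    return re.sub(r"\b(\d{3})[.\s](\d{3})[.\s](\d{4})\b", r"\1-\2-\3", text)


# Hypothetical vocabulary of valid values for a "city" column.
VALID_CITIES = ["london", "paris", "berlin", "madrid"]


def correct_spelling(word, vocabulary=VALID_CITIES, cutoff=0.8):
    """Map a word to its closest valid value, or return it unchanged if none is close enough."""
    matches = difflib.get_close_matches(word.lower(), vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else word


text = normalize_phone_separators("Call 555.123.4567 or 555 123 4567")
print(text)                  # Call 555-123-4567 or 555-123-4567
print(extract_phones(text))  # ['555-123-4567', '555-123-4567']
print([correct_spelling(w) for w in ["Londn", "Berln", "Tokyo"]])
# ['london', 'berlin', 'Tokyo']
```

The `cutoff` parameter controls how similar a value must be before it is replaced; a higher cutoff makes fewer, safer corrections and leaves more values for manual review.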
